An independent AI research & engineering lab based in Austria, building the next generation of intelligent systems.

Noeum-1-Nano is a nano-scale Mixture-of-Experts (MoE) model (0.6B total / ~0.2B active parameters) trained on 18 billion tokens. In our evaluations it matches the capabilities of nano-class models from major labs, showing both efficiency and reasoning quality. Built entirely from scratch, with no pretrained weights and no inherited shortcuts, this independent effort demonstrates that innovative techniques and intelligent design can deliver competitive capability and compete with brute-force scale.
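For readers less familiar with MoE models, the gap between total and active parameters comes from routing: each token is sent to only a few experts, so most expert weights sit idle for any given token. The sketch below shows generic top-k routing in plain Python. The number of experts, `k`, the toy experts, and the parameter split passed to `active_fraction` are hypothetical illustration values, not Noeum-1-Nano's actual configuration, which is not described here.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x: Vector, experts: Sequence[Expert], router_logits: Sequence[float], k: int = 2) -> Vector:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    out = [0.0] * len(x)
    for idx, weight in top_k_route(router_logits, k):
        y = experts[idx](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


def active_fraction(shared_params: int, params_per_expert: int, num_experts: int, k: int) -> float:
    """Share of parameters used per token, for a hypothetical shared/expert split."""
    total = shared_params + num_experts * params_per_expert
    active = shared_params + k * params_per_expert
    return active / total


if __name__ == "__main__":
    # Toy experts (illustration only): expert s scales its input by s.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in range(4)]
    logits = [0.1, 2.0, 0.5, 1.5]  # in a real model, produced by a learned router layer
    print(top_k_route(logits, k=2))  # experts 1 and 3 are selected
    print(moe_forward([1.0, 2.0], experts, logits, k=2))
    print(active_fraction(100, 50, 8, 2))  # 0.4
```

In a real MoE layer the router logits are computed from each token's hidden state by a learned layer; they are passed in directly here to keep the example small. The active fraction depends entirely on how a model divides its weights between shared layers and experts.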

Vision

What’s next with the right compute and support

With the right compute infrastructure and backing, we’re ready to scale this work beyond nano models.

The plan is to train a full-size model with multimodal and multilingual support on 1–3 trillion tokens, focusing on recursive reasoning architectures, self-correcting pipelines for real-world reasoning, and long-context efficiency (including MuonClip and latent-attention variants). This is a step toward competing with the largest AI labs on reasoning quality per unit of compute.

Our approach remains the same: iterate and validate at nano scale with minimal compute, then scale only proven techniques to full-size models.

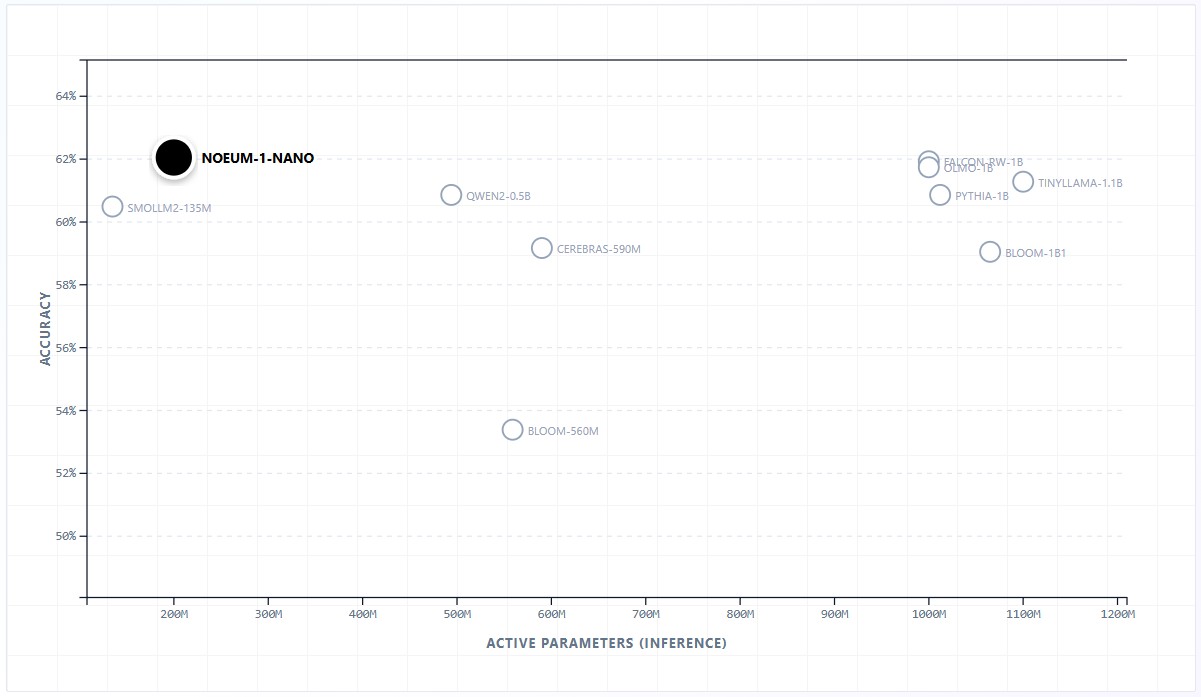

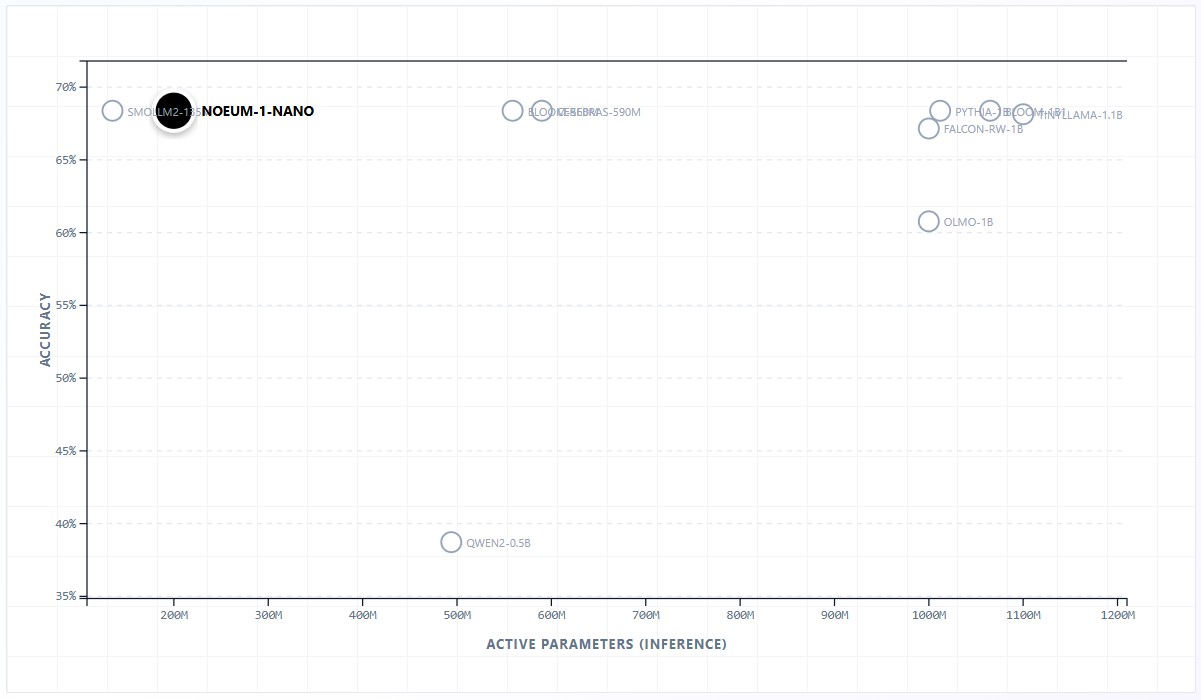

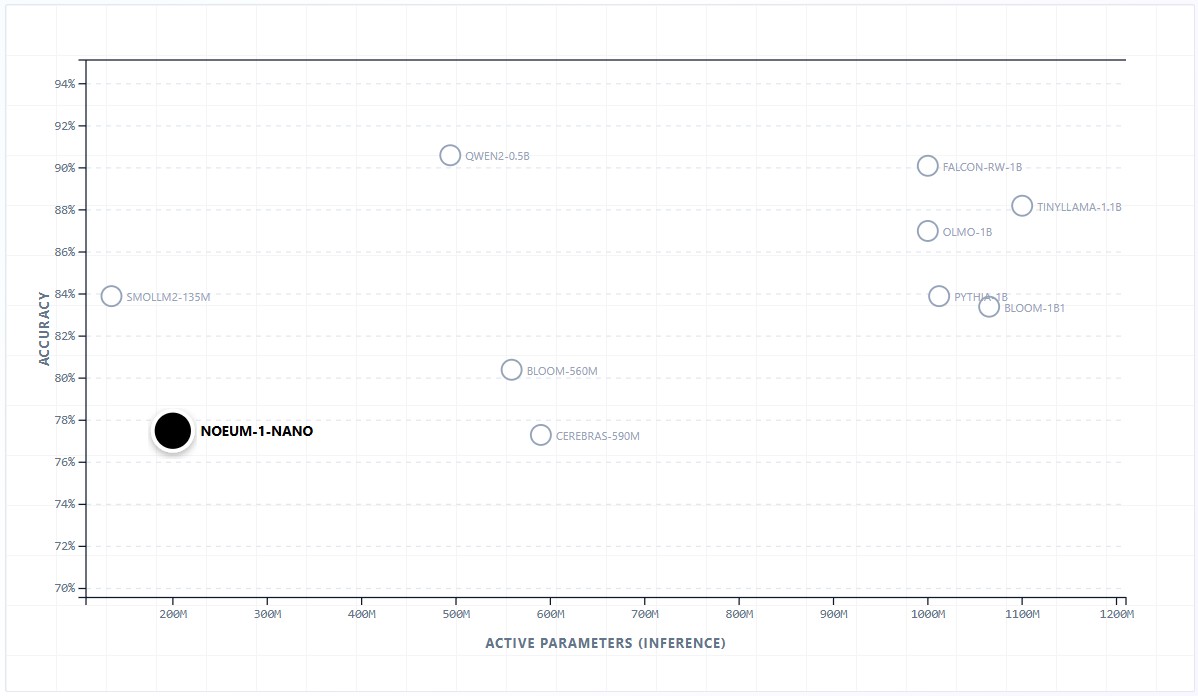

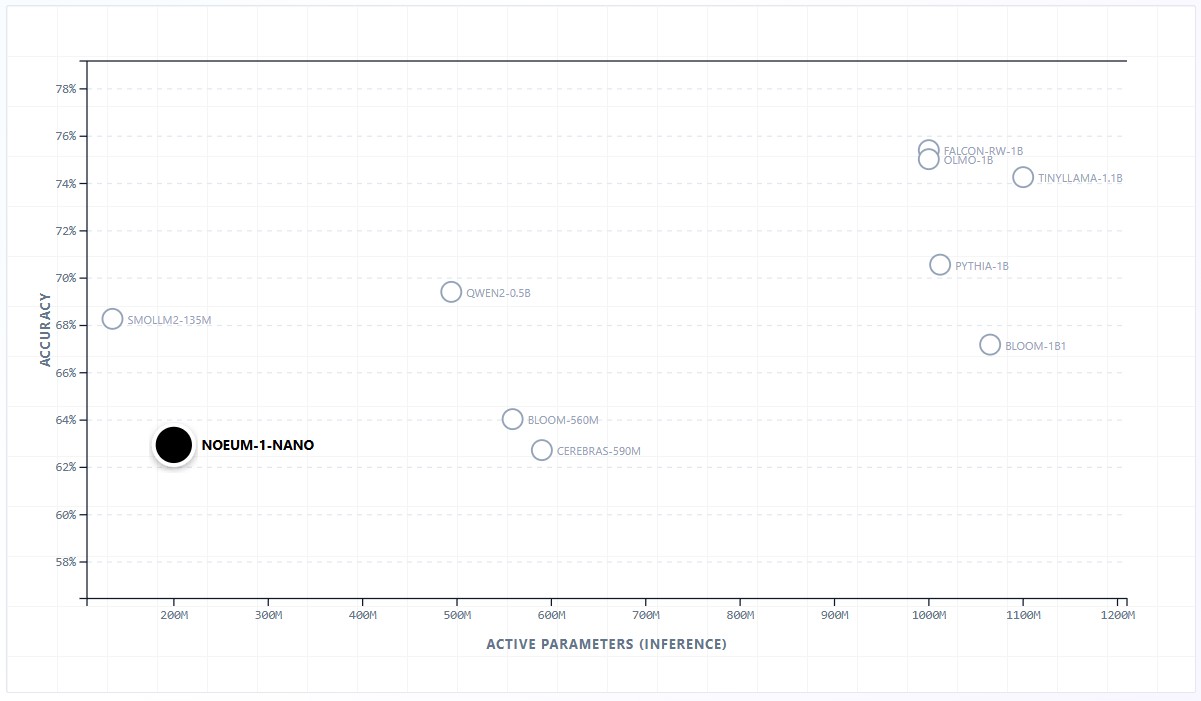

Benchmarks

Conducted with Noeum thinking mode DISABLED to ensure a fair comparison

The benchmarks below show Noeum-1-Nano achieving above-average performance despite an extreme disparity in training volume. While standard models like Qwen2 or TinyLlama are trained on 2 to 12 trillion tokens, Noeum achieves competitive reasoning with just 18 billion high-signal tokens, up to roughly 667x less training data. Even so, Noeum matches or beats widely used 1B models and ranks #1 on logic-heavy benchmarks like BoolQ and MRPC.
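The data-reduction factor is simply a baseline's training-token count divided by Noeum-1-Nano's 18 billion tokens. The short sketch below computes it for the two endpoints of the 2 to 12 trillion token range quoted above; `data_reduction` is an illustrative helper name.

```python
NOEUM_TOKENS = 18e9  # 18 billion training tokens, as stated above


def data_reduction(baseline_tokens: float, model_tokens: float = NOEUM_TOKENS) -> float:
    """How many times more tokens the baseline was trained on."""
    return baseline_tokens / model_tokens


# Endpoints of the 2-12 trillion token range.
for label, tokens in [("2T", 2e12), ("12T", 12e12)]:
    print(f"{label}: {data_reduction(tokens):.0f}x")
# prints:
# 2T: 111x
# 12T: 667x
```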

BoolQ

Complex yes/no reasoning on challenging passages.

CB

Discourse-level entailment and clause embedding.

SST-2

Binary sentiment classification on movie reviews.

MRPC

Semantic equivalence detection in sentence pairs.

SciQ

Physics, chemistry & biology knowledge retrieval.

PIQA

Physical reasoning and affordances.

About

Noeum.ai is an independent AI research & engineering lab based in Austria, focused on efficiency-first training and reproducible evaluation. We build models end to end, from pre-training to post-training and benchmarking, with the goal of improving reasoning quality per unit of compute. Founded by Bledar Ramo, Noeum.ai currently operates as a lean, self-funded effort dedicated to advancing novel reasoning systems, including controllable System-2-style verification, self-correcting pipelines, and architectures aimed at stronger long-context reasoning.

noeum-1-nano

We invite you to try the model in your environment, review exactly how the benchmarks were run, and reproduce or extend the results with your own evaluation suite. Comparisons against your preferred baselines—and replication feedback—are welcome.
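If you are reproducing yes/no benchmarks such as BoolQ, one common zero-shot setup compares the model's log-probability for the answers " yes" and " no" and picks the higher one. The sketch below assumes a hypothetical `logprob(prompt, continuation)` function that you supply from your own inference stack; the prompt template is also an assumption and not necessarily the one used for the results above.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical scorer you provide: log P(continuation | prompt) under your model.
# This is not a Noeum or library API.
LogProbFn = Callable[[str, str], float]


def predict_yes_no(passage: str, question: str, logprob: LogProbFn) -> bool:
    """Zero-shot yes/no prediction by comparing answer log-probabilities."""
    # Assumed prompt template; adjust it to match the setup you are reproducing.
    prompt = f"{passage}\nQuestion: {question}?\nAnswer:"
    return logprob(prompt, " yes") > logprob(prompt, " no")


def accuracy(examples: Sequence[Tuple[str, str, bool]], logprob: LogProbFn) -> float:
    """Fraction of (passage, question, label) examples predicted correctly."""
    correct = sum(predict_yes_no(p, q, logprob) == label for p, q, label in examples)
    return correct / len(examples)


if __name__ == "__main__":
    # Toy stand-in for a model, for demonstration only.
    def toy_logprob(prompt: str, continuation: str) -> float:
        says_yes = "sky" in prompt
        return 0.0 if says_yes == (continuation == " yes") else -1.0

    data = [
        ("The sky is blue.", "is the sky blue", True),
        ("Grass is green.", "is grass red", False),
    ]
    print(accuracy(data, toy_logprob))  # 1.0
```

Swapping `toy_logprob` for a real scorer is the only change needed to run this against an actual model; keeping the scorer separate makes it easy to compare several baselines with the same prompt and metric.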

LLMification

Transforming human knowledge from readable to learnable.

Worked examples become SFT data, practice problems become RL environments with verifiable answers, and synthetic generators produce large-scale variations from compact templates.
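To illustrate the last two ideas, the sketch below expands one compact template (a hypothetical linear-equation generator) into many seeded problem variants, each carrying a ground-truth answer that a reward function can check exactly. The template, the answer-extraction rule (the last integer in the model's output), and all names here are assumptions for illustration, not Noeum's pipeline.

```python
import random
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Problem:
    prompt: str
    answer: int  # ground truth, so the reward can be checked exactly


def linear_equation_template(rng: random.Random) -> Problem:
    """Hypothetical compact template: solve a*x + b = c for an integer x."""
    a = rng.randint(2, 9)
    x = rng.randint(-20, 20)
    b = rng.randint(-50, 50)
    sign = "+" if b >= 0 else "-"
    return Problem(prompt=f"Solve for x: {a}x {sign} {abs(b)} = {a * x + b}", answer=x)


def generate(template: Callable[[random.Random], Problem], n: int, seed: int = 0) -> List[Problem]:
    """Expand one template into n variants; the seed makes the set reproducible."""
    rng = random.Random(seed)
    return [template(rng) for _ in range(n)]


def verifiable_reward(problem: Problem, model_output: str) -> float:
    """1.0 if the last integer in the model's output equals the answer, else 0.0."""
    numbers = re.findall(r"-?\d+", model_output)
    if not numbers:
        return 0.0
    return 1.0 if int(numbers[-1]) == problem.answer else 0.0


if __name__ == "__main__":
    for p in generate(linear_equation_template, n=3, seed=42):
        print(p.prompt, "->", p.answer)
    first = generate(linear_equation_template, n=1, seed=42)[0]
    print(verifiable_reward(first, f"x = {first.answer}"))  # 1.0
```

Because every variant stores its own answer, the same generator can feed an RL environment (reward from `verifiable_reward`) without any human labeling; richer templates would only change `linear_equation_template`.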